What's the deal with AI, anyway?

If you’re anything like me (read: a non-tech person in the tech world), you’re constantly faced with new technologies and their terminologies that initially make absolutely no sense to you. With technology developing so quickly, there’s always a new buzzword that requires you to learn what the hell it means and understand how that technology actually works.

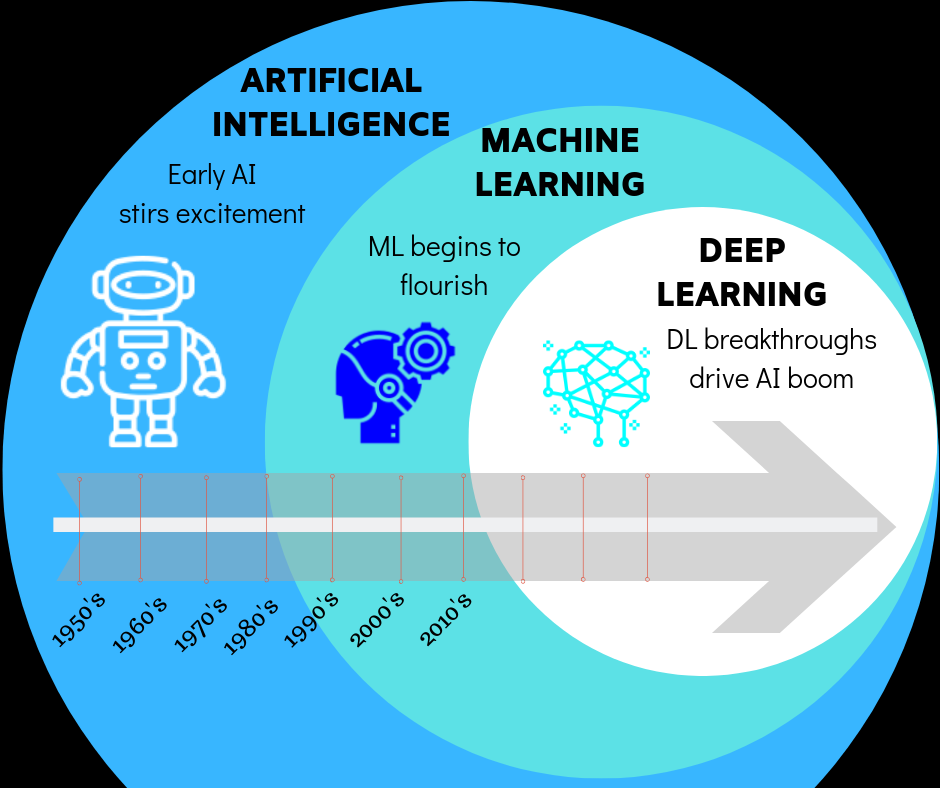

Lately, artificial intelligence is everywhere! AI, machine learning, deep learning… I’m sure you’ve stumbled upon these, but what exactly do they mean and are they all the same? And what exactly can they do?

Artificial intelligence

Well, basically, all those terms are interconnected and are all about machines doing stuff instead of humans. And yes, you’re probably thinking, ‘No shit, Sherlock!’

Ok, ok, bear with me! Artificial intelligence is a term that’s been around since the 1950s, when a group of scientists came up with an idea that we’ve so far mostly seen only in movies. In the decades since, AI has been hailed both as the most important technology and as the biggest science fiction of our lives. And, depending on the moment in time, it was both.

Namely, due to high expectations, AI went through waves of huge excitement followed by huge disappointment. After each disappointment, it would enter a so-called AI winter, when people would practically give up on AI because the seemingly endless investments of both time and money had produced no real results.

But every now and then, some new breakthrough would come along and awe the public. So, please allow me to take you through those moments by using games as examples. And if you’re wondering why games, let’s just say it’s for consistency purposes. It’ll make sense, I promise!

Ok, let’s go back in time a bit. Remember 1997, when IBM’s Deep Blue supercomputer beat Garry Kasparov in chess? That was the first time a computer defeated a reigning world chess champion in a match, and it was considered a sign that machines were catching up to human intelligence.

However, for more than a decade after that event, nothing really happened. Until 2011, when another machine beat humans, this time in the game of Jeopardy. It was again an IBM supercomputer, this one named Watson, that defeated two of Jeopardy’s greatest champions. And it actually took 5 years to build a machine capable of doing it, even though it was initially just for demonstration purposes. At the time, IBM had no idea whether they would be able to commercialize the project.

This event proved to be a more realistic sign that significant movement was happening in the field of AI, and over the past few years, especially since 2015, AI has exploded. Why now, you ask, and not before? Well, we’ve reached the moment in time when two things overlapped. We now have:

enough processing power to crunch…

… the virtually infinite amount of data we’re now producing every day, which is (or better said, was, at least in May 2018) 2.5 quintillion bytes (a.k.a. 25 followed by 17 zeros… yes, you read that right!). And that number is only ever increasing with the growth of IoT (Internet of Things). Just imagine, over 90% of the data in the world was generated in the last two years. Only two! TWO! 2!!!

Machine learning

But ok, I’m getting ahead of myself. I wanted to explain the difference between AI (Artificial intelligence), ML (Machine learning), and DL (Deep learning) first.

So, as I’ve mentioned, AI as a term was born when some scientists dreamt of machines that possess the same intelligence as humans. That is something we now know as ‘General AI’, and the Terminator is probably the first thing that comes to people’s minds when they think of it.

Luckily, we’re still far away from killer robots that will wipe us off the face of the Earth (although we should be aware of that as a possible future scenario, and of its implications), as machines today can outperform humans only in very narrow fields. What we have nowadays is so-called ‘Narrow Artificial Intelligence’: technology that can beat humans at very specific tasks, usually those that involve images or numbers.

This ‘superiority’ of machines started happening with the ‘help’ of machine learning (ML), which is, in layman’s terms, an approach to achieving artificial intelligence. Before ML, AI experts tried to code everything manually, trying to think of every possible scenario and write a specific set of instructions for each one. Yes, it was as impossible as it sounds. So they realized they needed to ‘teach’ the machines to do that work themselves, and came up with algorithms that parse the data fed into the machine, ‘learn’ from it (e.g. figure out patterns in historical data) and then use what they learned to predict what comes next.
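To make the ‘learn from historical data, then predict’ idea a bit more concrete, here’s a tiny sketch in Python. It’s an illustration under stated assumptions, not how any real ML system is built: the sales numbers are made up, and `fit_line` is just a name chosen for this example. Instead of hand-coding a rule for every day, the code finds the pattern (a straight line) in past data and uses it to guess the next value.

```python
def fit_line(xs, ys):
    """Find the straight line (slope, intercept) that best fits the points (least squares)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Made-up 'historical data': items sold on days 1 to 5
days = [1, 2, 3, 4, 5]
sales = [12, 14, 16, 18, 20]

slope, intercept = fit_line(days, sales)   # the 'learning' step
prediction = slope * 6 + intercept         # the 'predicting' step, for day 6
print(f"Predicted sales for day 6: {prediction}")
```

Nobody told the program ‘sales go up by two a day’; it worked that out from the data, which is the whole point.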

However, even that approach was quite limiting for a long time, and it still required a lot of intervention and hand coding. But the desire to improve persevered, and with time, better learning algorithms appeared. And thus deep learning (DL) came along, which is essentially a subset of machine learning, a technique for implementing it.

Deep learning

The real ‘culprit’ for the recent boom of AI is the idea of having algorithms imitate the functioning of the human brain. And even that idea is nothing new; it has been around since the beginning of AI itself, but was impossible to put into practice as it requires enormous computing power. And then GPUs (Graphics Processing Units), previously appreciated only by gaming junkies, became popular for exactly that kind of number crunching. And the rest is history.

Deep learning uses brain-inspired algorithms, the so-called neural networks, to simulate the learning process. We might still not fully know how the human brain works, but that’s not stopping us from imitating it. And it seems to be working: among ML algorithms, deep learning has become the most promising approach to AI, and it has already broken many existing AI records.
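For the curious, here’s a minimal sketch of a single artificial neuron (a perceptron), the basic building block that neural networks stack in many layers. It’s a toy, not a deep network: the task (learning the logical OR of two inputs), the learning rate, and the function names are all assumptions made for illustration.

```python
# Training data for logical OR: (inputs, expected output)
EXAMPLES = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]

def predict(weights, bias, inputs):
    """'Fire' (return 1) if the weighted sum of the inputs is positive, else return 0."""
    total = bias + sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > 0 else 0

def train_neuron(examples, epochs=10, learning_rate=1):
    """Start knowing nothing (all zeros) and adjust after every wrong answer."""
    weights, bias = [0, 0], 0
    for _ in range(epochs):
        for inputs, expected in examples:
            error = expected - predict(weights, bias, inputs)
            # Nudge each weight in the direction that reduces the error
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias

weights, bias = train_neuron(EXAMPLES)
for inputs, expected in EXAMPLES:
    print(inputs, "->", predict(weights, bias, inputs), "| expected:", expected)
```

Deep learning does the same kind of nudging, just with a huge number of these units arranged in layers, which is why it needs so much computing power.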

Computers beating human champions in chess and Jeopardy was definitely impressive at the time, and yet it looks so trivial nowadays. Since then, many other games have been conquered by machines. In March 2016, Google DeepMind’s AlphaGo beat Lee Sedol, one of the world’s top Go players, in a five-game match. Go is considered much more complex than chess, and hence much more difficult for computers to win, due to its much larger branching factor (as in, many more possible moves at each turn than in chess).
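To get a feel for what a larger branching factor does, here’s a back-of-the-envelope sketch. The averages used (about 35 possible moves per turn in chess and about 250 in Go) are commonly cited rough figures, not numbers from this article, and `sequences_after` is a made-up name for illustration.

```python
# Rough, commonly cited average number of legal moves per turn (assumptions)
CHESS_BRANCHING = 35
GO_BRANCHING = 250

def sequences_after(branching_factor, moves):
    """Approximate number of different move sequences after `moves` turns."""
    return branching_factor ** moves

for moves in (1, 2, 4):
    chess = sequences_after(CHESS_BRANCHING, moves)
    go = sequences_after(GO_BRANCHING, moves)
    print(f"After {moves} moves: chess ~{chess:,} sequences, Go ~{go:,} sequences")
```

The gap grows with every extra move a program tries to look ahead, which is why simply checking every possibility gets out of hand in Go much faster than in chess.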

Then, at the very beginning of 2017, the almost unthinkable happened: a machine called Libratus beat four of the world’s best poker players in a tournament. What’s so special about this, you wonder? Well, poker is in some ways even more difficult for a machine than chess or Go because it’s a game of imperfect information. Not only do the opponents *not* see other players’ cards (unlike board games, where everything is out in the open), but more importantly, it’s a game of bluffing. In other words, the computer had to interpret misleading information, which was considered a solely human skill.

AI breakthroughs demonstrated through games, or better said, through computers beating humans at games, started to happen more and more often. In June 2018, it was Dota 2 players’ turn: OpenAI announced that its team of bots, OpenAI Five, had started beating human teams. What was remarkable here was that the bots had to play as a team of five, which requires a lot of collaboration and coordination, plus they learned everything by themselves. Basically, they learned to play the game by playing against themselves, racking up 180 years’ worth of games *each day*!

The same technique, called reinforcement learning, was used to ‘upgrade’ the aforementioned AlphaGo into AlphaGo Zero, announced in October 2017. This one also learned to play the game by playing against itself, without any human data, and surpassed all the older versions in only 40 days. Only two months later, another updated version, AlphaZero, was announced, and this one won 60 and lost 40 games against AlphaGo Zero after only 34 hours of teaching itself Go. A bit scary, isn’t it?
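If you want to see the ‘learn by playing against yourself’ idea in miniature, here’s a toy sketch. To be clear, this is not how OpenAI’s bots or AlphaGo Zero work (those rely on large neural networks and enormous computing power). It uses a simple lookup table with a Q-learning-style update, and the game is an assumption chosen for illustration: a pile of stones, players take turns removing 1 to 3, and whoever takes the last stone wins. All names and settings here are made up for the example.

```python
import random

MAX_TAKE = 3      # a player may remove 1, 2, or 3 stones per turn
LARGEST_PILE = 12

def legal_moves(stones):
    return range(1, min(MAX_TAKE, stones) + 1)

def train(episodes=5000, alpha=0.5, epsilon=0.2, seed=0):
    """Both 'players' share one table and improve it by playing each other."""
    rng = random.Random(seed)
    # q[stones][take] = how good removing `take` stones looks for the player to move
    q = {s: {a: 0.0 for a in legal_moves(s)} for s in range(1, LARGEST_PILE + 1)}
    for _ in range(episodes):
        stones = rng.randint(1, LARGEST_PILE)
        while stones > 0:
            moves = list(legal_moves(stones))
            if rng.random() < epsilon:
                take = rng.choice(moves)                # explore: try something random
            else:
                take = max(moves, key=q[stones].get)    # exploit: best known move
            remaining = stones - take
            if remaining == 0:
                target = 1.0                            # took the last stone: a win
            else:
                # Whatever is good for the opponent next turn is bad for me
                target = -max(q[remaining].values())
            q[stones][take] += alpha * (target - q[stones][take])
            stones = remaining
    return q

def best_move(q, stones):
    return max(q[stones], key=q[stones].get)

q = train()
for pile in (5, 6, 7):
    print(f"With {pile} stones left, the learned move is to take {best_move(q, pile)}")
```

Nobody tells the program the winning trick for this game (always leave your opponent a multiple of 4 stones); with enough games against itself, it should settle on that strategy on its own.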

So, is there anything that AI cannot do, you ask? You’re not alone there: many researchers are looking for the next big challenge for AI, and it seems that several peeps from the Alphabet-owned DeepMind Technologies and the University of Oxford have found it in a game called Hanabi. If you’ve never heard of it, you’re not alone there either; the game’s been around for only about a decade. What makes it such a special challenge is that it requires a lot of communication between players, a theory of mind, and cooperation, so it will be interesting to see how machines deal with that.

AI/ML/DL

So, to go back to the beginning… in short (says she after so much text), the easiest way to envision the relationship between artificial intelligence, machine learning, and deep learning would be to think of them as concentric circles, with AI being the biggest one, ML within it, and DL within that one.

So, there you go, just like I said right away, all these terms are interconnected and about machines doing stuff instead of humans.

AI in RL

Ok, as mentioned at the beginning, those examples were all fun and games. Literally! But what are some practical applications of AI? How can it be used in ‘real life’, and how can you and I benefit from it?

Now, since AI has infiltrated so many areas already and is present in a number of domains, that’s a topic for a whole new article. Stay tuned!

p.s. originally published on ZASTI’s Medium blog